Google is already responding to 15% of searches using an artificial intelligence system called RankBrain (RB). Even though RB is only one of the 200 factors in Hummingbird, Google’s current algorithm, it is considered the third most important ranking signal, behind content and links. RB is essentially a query interpretation model and a work in progress, intended to improve Google’s understanding of searcher intent.

The launch of the Hummingbird algorithm in 2013 was already a fundamental shift in the way Google processed queries: it placed greater emphasis on natural language and weighed context and meaning over individual keywords. Its goal was to leverage the 15+ years of data and user analysis that Google had collected to deliver contextually appropriate answers by searching for entities instead of keywords.

RB uses a machine learning method called Word2vec that groups keywords into semantic and syntactic concepts. An accuracy study (Altszyler et al., 2016) found that Word2vec outperforms another word-embedding technique, LSA, when it is trained on a medium to large corpus (more than 10 million words).
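To make the idea of grouping keywords by meaning more concrete, here is a minimal sketch in Python. It does not train Word2vec and says nothing about how RankBrain is implemented; the three-dimensional vectors, the `group_by_similarity` helper and the 0.9 threshold are all invented for illustration. It only shows how keywords with similar vectors can end up in the same concept group.

```python
import math

# Toy 3-dimensional vectors, invented for illustration only.
# Real Word2vec embeddings are learned from a large corpus and
# usually have hundreds of dimensions.
TOY_VECTORS = {
    "running shoes": [0.9, 0.1, 0.0],
    "jogging sneakers": [0.8, 0.2, 0.1],
    "marathon trainers": [0.85, 0.15, 0.05],
    "pizza delivery": [0.0, 0.9, 0.3],
    "takeaway pizza": [0.1, 0.8, 0.4],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def group_by_similarity(vectors, threshold=0.9):
    """Greedy grouping: a keyword joins the first group whose first
    member is at least `threshold` similar, otherwise it starts a new group.
    The threshold is an arbitrary value chosen for this toy data."""
    groups = []
    for keyword, vec in vectors.items():
        for group in groups:
            if cosine_similarity(vec, vectors[group[0]]) >= threshold:
                group.append(keyword)
                break
        else:
            groups.append([keyword])
    return groups


for group in group_by_similarity(TOY_VECTORS):
    print(group)
```

With this toy data, the three footwear queries fall into one group and the two pizza queries into another, even though they share few literal words.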

Does that actually change how we do SEO?

In a world where ‘content is king’ and backlinks are critical to better rankings, SEOs need to focus on creating unique, high-quality, unduplicated and utterly authentic content that is entertaining, useful and interesting.

Over time, domains must build a solid reputation based on the signals they want to serve. RankBrain creates an environment in which a site can become known for delivering quality content, and it rewards content creators who build topic clusters. Google now looks at the full scope of content published under a given URL rather than treating each individual post as its own SEO opportunity.

Larry Kim, founder and CTO of WordStream, suspects that RankBrain’s “Relevance Score” is the same as AdWords’ “Quality Score.”

Google uses the Quality Score with AdWords, the Display Network, YouTube Ads and Gmail Ads. It’s been so successful that Facebook’s Relevance Score and Twitter’s Quality Adjusted Bids are largely the same concept. If Larry Kim is right, the strategy for beating the Quality Score algorithm is just a matter of beating the expected click-through rate for a given ad spot.

Click-through rate (CTR) is the ratio of users who click on a link to the total number of users who view the page, email or ad containing it. It is commonly used to measure the success of an online advertising campaign.
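As a quick worked example of that ratio, the sketch below computes CTR from a click count and a view count. The function name `click_through_rate` and the sample figures are made up for illustration.

```python
def click_through_rate(clicks, views):
    """Return CTR as a fraction: clicks divided by total views."""
    if views <= 0:
        raise ValueError("views must be a positive number")
    return clicks / views


# Hypothetical campaign: 18 clicks out of 1,000 views.
ctr = click_through_rate(18, 1000)
print(f"CTR: {ctr:.2%}")  # prints "CTR: 1.80%"
```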

In most cases, a 1.5% – 2% click-through rate would be considered successful, though the exact number is debated and varies depending on the situation.

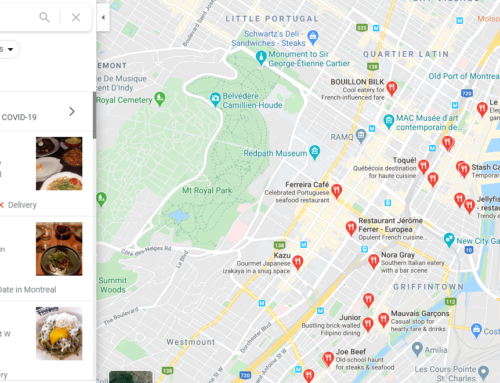

Basically, SEOs need to strive for higher-than-expected engagement metrics, where the expectation depends on a variety of criteria, including query, device type, location and time of day.
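One way to picture "higher than expected" is to compare the observed CTR of each segment with a baseline for that segment. The sketch below does this for two hypothetical segments. The `Segment` class, the `ctr_lift` helper and every number in it (including the expected CTR baselines) are assumptions for illustration; Google does not publish such baselines.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    query: str
    device: str
    impressions: int
    clicks: int
    expected_ctr: float  # hypothetical baseline for this query and device


def ctr_lift(segment):
    """Observed CTR divided by expected CTR; above 1.0 beats the baseline."""
    if segment.impressions <= 0 or segment.expected_ctr <= 0:
        raise ValueError("impressions and expected_ctr must be positive")
    observed = segment.clicks / segment.impressions
    return observed / segment.expected_ctr


# Invented sample data, not real measurements.
segments = [
    Segment("running shoes", "mobile", 1000, 60, 0.05),
    Segment("running shoes", "desktop", 1000, 30, 0.04),
]

for s in segments:
    lift = ctr_lift(s)
    status = "above" if lift > 1 else "at or below"
    print(f"{s.query} ({s.device}): lift {lift:.2f}, {status} expectation")
```

In this toy data the mobile segment beats its baseline while the desktop segment falls short, which is the kind of per-segment gap the advice above asks SEOs to look for.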